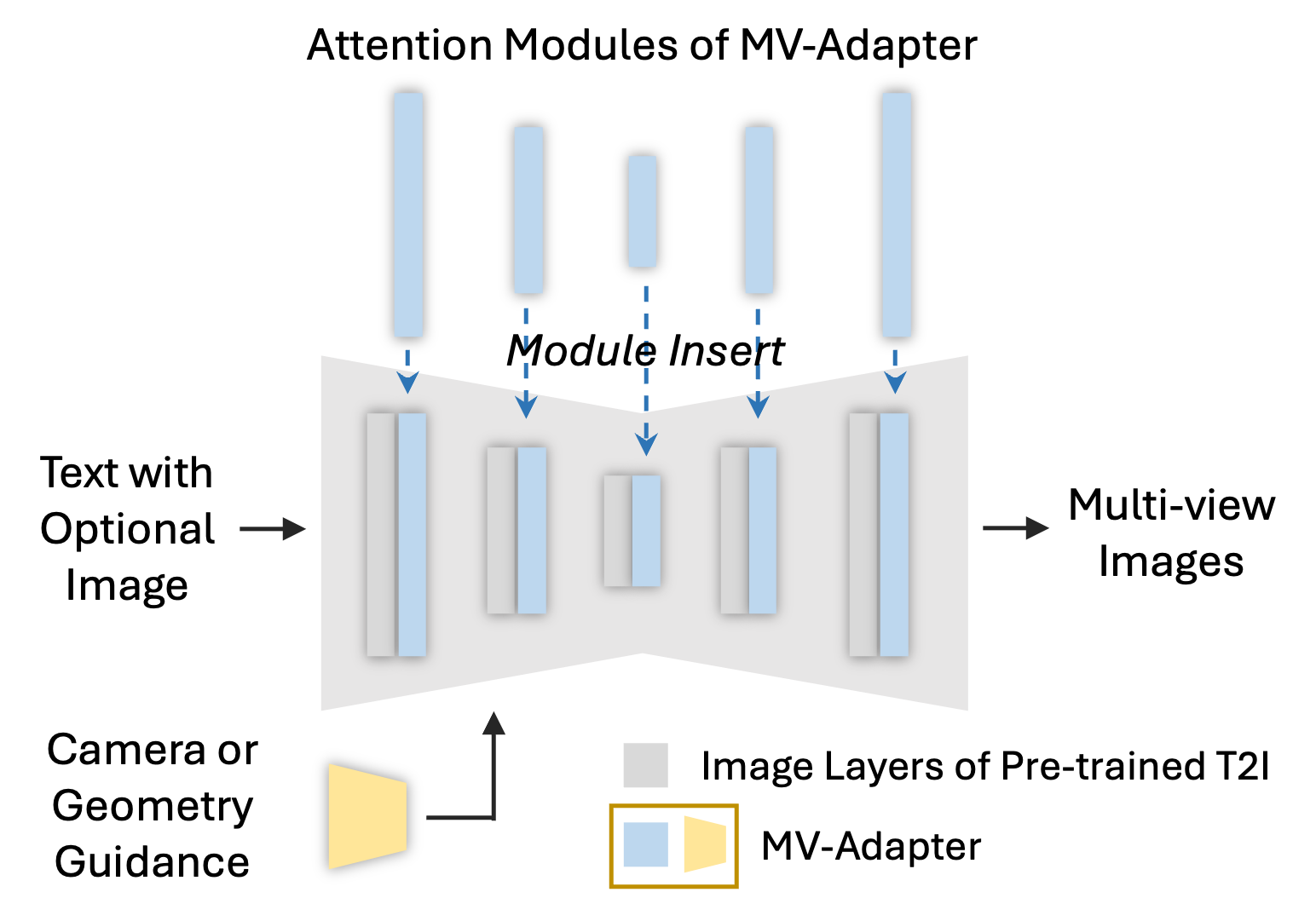

Generating multi-view images of an object has important applications in content creation and perception. Existing methods achieve this by making invasive changes to pre-trained text-to-image (T2I) models and performing full-parameter training, which leads to three main limitations: (1) high computational costs, especially for high-resolution outputs; (2) incompatibility with derivatives and extensions of the base model, such as personalized models, distilled few-step models, and plugins like ControlNets; (3) limited versatility, as they primarily serve a single purpose and cannot handle diverse conditioning signals such as text, images, and geometry. In this paper, we present MV-Adapter to address all of these limitations. MV-Adapter is designed as a plug-and-play module that works on top of pre-trained T2I models. This enables efficient training for high-resolution synthesis while maintaining full compatibility with derivatives of the base T2I model. MV-Adapter provides a unified implementation for generating multi-view images from various conditions, facilitating applications such as text- and image-based 3D generation and texturing. We demonstrate that MV-Adapter sets a new quality standard for multi-view image generation and opens up new possibilities due to its adaptability and versatility.
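To make the plug-and-play idea concrete, here is a toy NumPy sketch. It is not MV-Adapter's actual architecture: a frozen linear layer stands in for the pre-trained T2I model, and a parallel, zero-initialized linear branch stands in for the adapter. Only the adapter weights are optimized, so the trained adapter can afterwards be combined with a derivative of the base model, modeled here (hypothetically) as slightly perturbed base weights. The names `forward` and `train_adapter` are illustrative, not from the paper.

```python
import numpy as np


def forward(base_w, adapter_w, x):
    # Frozen base layer plus a parallel adapter branch; the outputs are summed
    return x @ base_w + x @ adapter_w


def train_adapter(base_w, x, target, steps=200, lr=0.1):
    # Only adapter_w is optimized; base_w is read but never modified
    adapter_w = np.zeros((x.shape[1], target.shape[1]))  # zero init: starts as a no-op
    for _ in range(steps):
        err = forward(base_w, adapter_w, x) - target
        grad = 2.0 * x.T @ err / err.size  # gradient of mean squared error w.r.t. adapter_w
        adapter_w -= lr * grad
    return adapter_w


rng = np.random.default_rng(0)
x = rng.standard_normal((32, 8))
base_w = rng.standard_normal((8, 8))  # stand-in for pre-trained weights
target = x @ (base_w + 0.5 * rng.standard_normal((8, 8)))
adapter_w = train_adapter(base_w, x, target)

# Reuse the trained adapter on a hypothetical derivative of the base model
derivative_w = base_w + 0.01 * rng.standard_normal((8, 8))
y = forward(derivative_w, adapter_w, x)
```

Because the base weights are never written, any checkpoint sharing the base architecture can host the same adapter; how well it transfers depends on how far the derivative drifts from the base, which this toy does not model.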

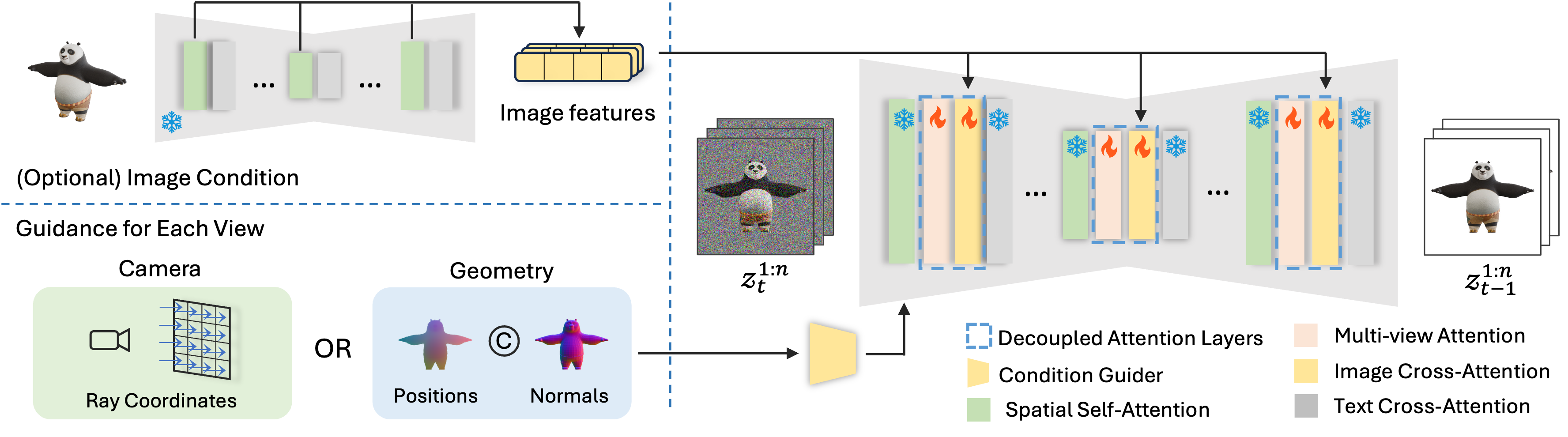

Our MV-Adapter consists of two components: (1) a condition guider that encodes camera or geometry conditions; (2) decoupled attention layers, comprising multi-view attention layers that learn multi-view consistency and optional image cross-attention layers that support image-conditioned generation. For image conditioning, we use the pre-trained U-Net to encode the reference image and extract fine-grained information.
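The caption describes the architecture only at block level. Below is a minimal NumPy sketch of how such a block could be wired, under assumptions not stated in the caption: the new branches run in parallel with the frozen per-view self-attention and are summed into the residual; their output projections are zero-initialized so the block initially reproduces the base model; multi-view attention is full attention over the tokens of all views; and the condition guider is reduced to a linear map from per-token condition features (e.g. a camera ray map) added to the input. `DecoupledAttentionBlock`, `condition_guider`, and the random stand-in weights are hypothetical; normalization, multiple heads, and text cross-attention are omitted.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q, k, v):
    # Scaled dot-product attention; q: (..., Nq, C), k and v: (..., Nk, C)
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v


def condition_guider(cond, w):
    # Hypothetical guider: project per-token condition features (V, N, D) to (V, N, C)
    return cond @ w


class DecoupledAttentionBlock:
    def __init__(self, c, seed=0):
        rng = np.random.default_rng(seed)

        def mat():
            return rng.standard_normal((c, c)) / np.sqrt(c)

        # Stand-in for the frozen, pre-trained self-attention of the T2I U-Net
        self.self_q, self.self_k, self.self_v = mat(), mat(), mat()
        # New multi-view branch; output projection zero-initialized (assumption)
        self.mv_q, self.mv_k, self.mv_v = mat(), mat(), mat()
        self.mv_out = np.zeros((c, c))
        # New image cross-attention branch; output projection zero-initialized
        self.ref_q, self.ref_k, self.ref_v = mat(), mat(), mat()
        self.ref_out = np.zeros((c, c))

    def __call__(self, h, ref=None):
        # h: (V, N, C) tokens for V views; ref: optional (M, C) reference-image tokens
        v_views, n, c = h.shape
        # Original path: self-attention within each view (batched over V)
        out = h + attention(h @ self.self_q, h @ self.self_k, h @ self.self_v)
        # Multi-view attention: each token attends to the tokens of all views
        flat = h.reshape(1, v_views * n, c)
        mv = attention(flat @ self.mv_q, flat @ self.mv_k, flat @ self.mv_v)
        out = out + (mv @ self.mv_out).reshape(v_views, n, c)
        if ref is not None:
            # Image cross-attention: queries from each view, keys/values from the reference
            k = (ref @ self.ref_k)[None]  # (1, M, C), broadcast over views
            v = (ref @ self.ref_v)[None]
            out = out + attention(h @ self.ref_q, k, v) @ self.ref_out
        return out


# Usage with random stand-in data: 4 views, 16 tokens each, 8 channels
rng = np.random.default_rng(1)
views, tokens, channels, cond_dim = 4, 16, 8, 6
ray_maps = rng.standard_normal((views, tokens, cond_dim))  # e.g. ray origin + direction
h = rng.standard_normal((views, tokens, channels))
h = h + condition_guider(ray_maps, rng.standard_normal((cond_dim, channels)))
block = DecoupledAttentionBlock(channels)
out = block(h, ref=rng.standard_normal((20, channels)))
print(out.shape)  # (4, 16, 8)
```

Zero-initializing the new output projections is one common way to add branches to a pre-trained network without disturbing its initial behavior; whether MV-Adapter uses exactly this scheme is an assumption here.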